Data archives from NASA’s Earth Observing System Data and Information System (EOSDIS), which collects data from satellites, aircraft, and ground instruments, currently contain about 31 petabytes (PB) of data. That’s 31 followed by 15 zeros, or 31 million billion bytes. Within three years, the archives are expected to hold more than 150 PB and to keep growing by nearly 50 PB every year.
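The figures above can be checked with a little arithmetic. This assumes decimal (SI) petabytes, 1 PB = 10^15 bytes, which matches the "15 zeros" in the text. The second line is a back-of-the-envelope average growth rate implied by the projection, not a figure reported by NASA.

```latex
31\ \text{PB} = 31 \times 10^{15}\ \text{bytes}
  = 31 \times \underbrace{10^{6}}_{\text{million}} \times \underbrace{10^{9}}_{\text{billion}}\ \text{bytes}

\frac{150\ \text{PB} - 31\ \text{PB}}{3\ \text{years}} \approx 40\ \text{PB per year}
```

Because the archives are expected to exceed 150 PB, roughly 40 PB per year is a lower bound on the average growth over the next three years. That rate sits below the nearly 50 PB per year expected afterward.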

“Now we have so much raw data. So how do we analyze it? How do we make it useful for the research community?” asks Jianwu Wang, assistant professor of information systems and affiliated faculty at UMBC’s Joint Center for Earth Systems Technology (JCET), a partnership with NASA.

While Earth scientists are encountering this glut of satellite data, researchers in computing fields are rapidly increasing the capabilities of artificial intelligence and machine learning technologies. At the same time, there is an increasingly urgent need to better understand Earth’s systems as they shift due to climate change.

All of these factors drove Wang and his collaborators to find ways to help researchers access useful information collected by Earth-observing satellites much faster. A new $1.4 million award from NASA’s Advancing Collaborative Connections for Earth System Science (ACCESS) program will make their work possible.

Taking computers to cloud school

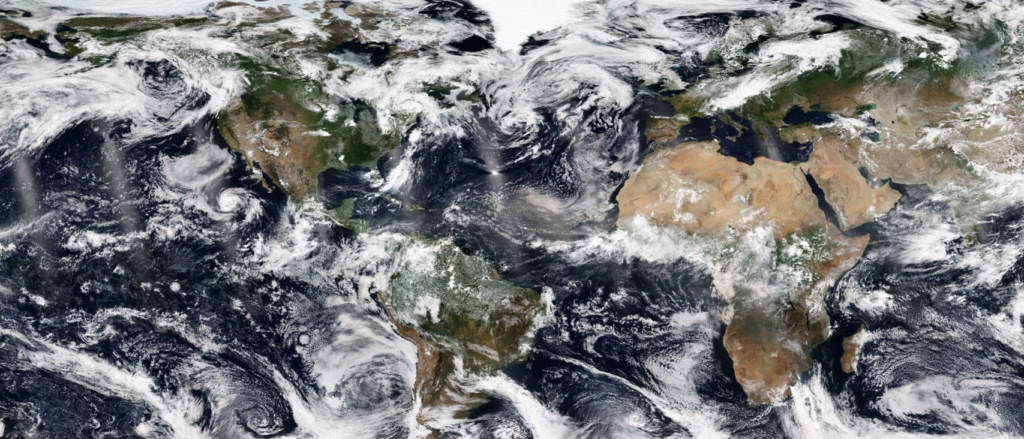

The ACCESS project focuses specifically on improving how algorithms process and learn from the data satellites collect about clouds. At any moment, clouds cover about two-thirds of Earth’s surface, yet their role in the global climate is still poorly understood. Zhibo Zhang, associate professor of physics and a co-PI on the project, and his research group have been working to improve understanding of how clouds regulate the global energy balance and precipitation.

To understand how clouds work in the global system, scientists need the data collected by instruments aboard Earth-orbiting satellites. But the data needs some analysis before it’s useful. For example, when an instrument on a satellite looks at Earth, it can detect things like brightness and color. But it can’t tell whether it’s looking at a cloud or at clear sky. That’s the job of computer algorithms that scientists apply to the data after it’s collected.

Clouds vary greatly in appearance, so the computer needs to learn what different kinds of clouds look like. That way, it can report “cloud” whenever the data match what it has learned a cloud looks like. This process of teaching a computer to learn from examples is called “machine learning.”

To train the computer algorithm, researchers feed the computer data that’s already labeled as “cloud” or “not-cloud.” Eventually, the computer learns to tell the difference on its own, and can report accurately whether an image it’s never seen before is a cloud or not. A good algorithm can learn to tell the difference between a cloud, smoke, dust, and other kinds of particles found in the atmosphere.
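For readers curious what learning from labeled examples looks like in code, here is a minimal Python sketch. It is not the team’s algorithm, which the article does not describe. It uses a nearest-centroid classifier, one of the simplest supervised methods, and the two per-pixel features (brightness and a "blueness" value) and every number in the training set are hypothetical. Real cloud-detection algorithms draw on many more measurements per pixel.

```python
from statistics import mean

# Hypothetical toy training set: (brightness, blueness) for each pixel, scaled 0-1,
# already labeled by a human. These numbers are made up for illustration.
TRAINING = [
    ((0.90, 0.80), "cloud"),
    ((0.85, 0.75), "cloud"),
    ((0.95, 0.90), "cloud"),
    ((0.20, 0.40), "not-cloud"),
    ((0.30, 0.35), "not-cloud"),
    ((0.15, 0.50), "not-cloud"),
]


def train(examples):
    """Learn one centroid (the average feature vector) for each label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def classify(model, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def distance2(centroid):
        return sum((f - c) ** 2 for f, c in zip(features, centroid))
    return min(model, key=lambda label: distance2(model[label]))


model = train(TRAINING)
print(classify(model, (0.88, 0.70)))  # an unseen bright pixel → cloud
print(classify(model, (0.25, 0.45)))  # an unseen dark pixel → not-cloud
```

Telling clouds apart from smoke or dust would, in this picture, simply mean adding more labels and more informative features to the training set.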

It’s all connected

One goal of the new project is to generate these training data sets. At the most basic level, this resembles the captchas that ask people to “click the boxes that include clouds,” but repeated millions of times and with significant added challenges and complexity.

For example, clouds cast shadows on each other and interact in other ways. So when the computer is trying to make a judgment about a given pixel in an image, it actually needs information about the surrounding pixels as well. Those interactions can extend far beyond what’s right next door. When looking at a spot in Maryland, for example, “You don’t only need to know about Maryland, you need to know about New York,” Zhang says.
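The point about surrounding pixels can be illustrated with a small Python sketch that describes each pixel by its own brightness plus summary statistics of a square window around it. The image values, the `radius` parameter, and the helper names are hypothetical. The team plans to learn how pixels influence one another from numerical simulations, not from a fixed window like this one.

```python
# Hypothetical 5x5 brightness image (0 = dark, 1 = bright).
# The top-left block is a bright cloud; (2, 2) is a dark pixel right beside it,
# and (4, 4) is an equally dark pixel far from any cloud.
IMAGE = [
    [0.9, 0.9, 0.3, 0.3, 0.3],
    [0.9, 0.9, 0.3, 0.3, 0.3],
    [0.3, 0.3, 0.1, 0.3, 0.3],
    [0.3, 0.3, 0.3, 0.3, 0.3],
    [0.3, 0.3, 0.3, 0.3, 0.1],
]


def neighborhood(grid, row, col, radius):
    """Collect values in a (2*radius+1)-wide square window, clipped at the image edges."""
    rows, cols = len(grid), len(grid[0])
    return [
        grid[r][c]
        for r in range(max(0, row - radius), min(rows, row + radius + 1))
        for c in range(max(0, col - radius), min(cols, col + radius + 1))
    ]


def pixel_features(grid, row, col, radius):
    """Feature vector: the pixel's own brightness plus its window's mean and maximum."""
    window = neighborhood(grid, row, col, radius)
    return (grid[row][col], sum(window) / len(window), max(window))


for radius in (0, 1):
    print(radius, pixel_features(IMAGE, 2, 2, radius), pixel_features(IMAGE, 4, 4, radius))
```

Seen alone (radius 0), the two dark pixels look identical. Widening the window reveals that only the first sits next to a bright cloud, which a classifier could use to flag it as a possible shadow. Zhang’s Maryland and New York example suggests that, in real scenes, the relevant neighborhood can be very wide.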

To address this challenge, the team will generate numerical simulations, rather than relying only on observations collected directly by satellites, to define in computer code how clouds and other particles interact with each other in the atmosphere.

Using those complex simulations, “We can know which pixels are affecting their surroundings or being affected by their surroundings. That way, we’ll have a totally connected network that we can use to train the algorithms,” Zhang says. “Even observations cannot tell us which pixel is affecting which pixel. Only numerical simulations can do that.”

Decoding the data

Another important part of their work will make it possible to transfer knowledge between two different categories of instruments. The first type, active sensors, are extremely accurate but observe only a very small portion of the sky: all of them together watch only about 10 percent of Earth’s surface. Passive sensors, on the other hand, are a little less accurate but, combined, look at nearly the whole globe.
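One simple way to picture this kind of knowledge transfer is to use the accurate but sparse sensor as a labeler for the wide-coverage one: where both see the same pixels, the active sensor’s verdict becomes a training label for the passive sensor’s measurements. The Python sketch below does this with a single brightness threshold. It is a generic illustration under stated assumptions, not the team’s method; the scene, the threshold rule, and the function name are hypothetical, and, as Purushotham notes below, the real problem calls for far more than an off-the-shelf model.

```python
from typing import List, Optional, Tuple

# Hypothetical toy scene. Every pixel has a passive-sensor brightness (0-1);
# only pixels under the narrow active-sensor track also carry a cloud label
# (True = cloud, False = clear). None means "outside the active track".
SCENE: List[Tuple[float, Optional[bool]]] = [
    (0.92, True), (0.15, False), (0.80, True), (0.35, False),
    (0.88, None), (0.20, None), (0.85, None), (0.10, None),
]


def learn_threshold(labeled: List[Tuple[float, bool]]) -> float:
    """Pick the brightness cutoff that best reproduces the active-sensor labels."""
    def correct(threshold: float) -> int:
        # Count pixels where "brightness >= threshold" agrees with the label.
        return sum((b >= threshold) == is_cloud for b, is_cloud in labeled)
    return max(sorted(b for b, _ in labeled), key=correct)


labeled = [(b, label) for b, label in SCENE if label is not None]
unlabeled = [b for b, label in SCENE if label is None]

threshold = learn_threshold(labeled)
predictions = [b >= threshold for b in unlabeled]
print(threshold, predictions)  # → 0.8 [True, False, True, False]
```

The rule is learned only where the active track provides labels, then applied to passive-only pixels, extending the active sensor’s knowledge across the much wider passive coverage.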

“These sensors collect different kinds of data,” and all of it is valuable, says Sanjay Purushotham, assistant professor of information systems and another co-investigator on the project. A major challenge for the team is coming up with algorithms that allow computers to use all of the available data—from both kinds of sensors—to define clouds and their interactions in ways a computer can understand.

“You cannot use any off-the-shelf machine learning or deep learning model to solve this problem,” Purushotham says.

The magic of AI

All of this algorithm development takes a lot of resources and human effort. However, the team is working to automate some parts of the process. Right now, “It’s always difficult to duplicate an algorithm designed for one instrument for other, similar instruments, or even for the same instrument on a different platform,” explains Chenxi Wang, a co-PI on the project and an assistant research scientist with JCET. “Even subtle changes in the instrument or the platform’s orbit can cause the original algorithm to fail.”

“You have to develop almost a brand new algorithm,” C. Wang adds. “You have to adjust parameters, check the stability of the algorithm, and do evaluation… You have to do everything again. And that can take from six months to several years.”

C. Wang hopes to help the computer learn to adapt an algorithm from one instrument to another on its own, based on an understanding of the fundamental physics. All a human would have to do is give the program certain parameters describing the instrument and the satellite that carries it.

“I think that’s the magic of machine learning and artificial intelligence,” says C. Wang. “The hope is that instead of years, it will take only a few days or at most a week. It will save a lot of time and resources.”

“We’re developing this process so it can be universal and applied to any instrument,” adds Zhang. “It’ll liberate some scientists from repeating the same things again and again to fine-tune the algorithms.” It will also get data to scientists like him much faster. As he notes, “If you have to wait for many years to get that useful data, it’s harder to make progress.”

The power of partnership

UMBC’s long-term partnership with NASA has helped make this project possible. “The special connection between UMBC and NASA through JCET has definitely prepared us better for this kind of proposal,” Zhang says.

In addition, a confluence of developments has given fresh impetus to this kind of work. For one thing, demand for climate data is on the rise, given the increasing visibility of the climate emergency. “Cloud observation is a high priority for NASA today. No one knows just how much clouds are contributing to climate change and other things,” J. Wang says. “That’s why we chose this topic, because it’s so important to understand Earth.”

Parallel advances in machine learning and data collection further fuel the effort. “Even two or three years ago we couldn’t have done this,” J. Wang reflects.

In the end, though, it comes down to collaboration. Each member of the team of data scientists and atmospheric physicists brings a unique perspective and knowledge base.

“We have good synergy among the team members, so we can speak the same language even though we come from different disciplines,” Purushotham says. “That helps us understand what the real problems in the data are, and what innovations we need to solve them.”

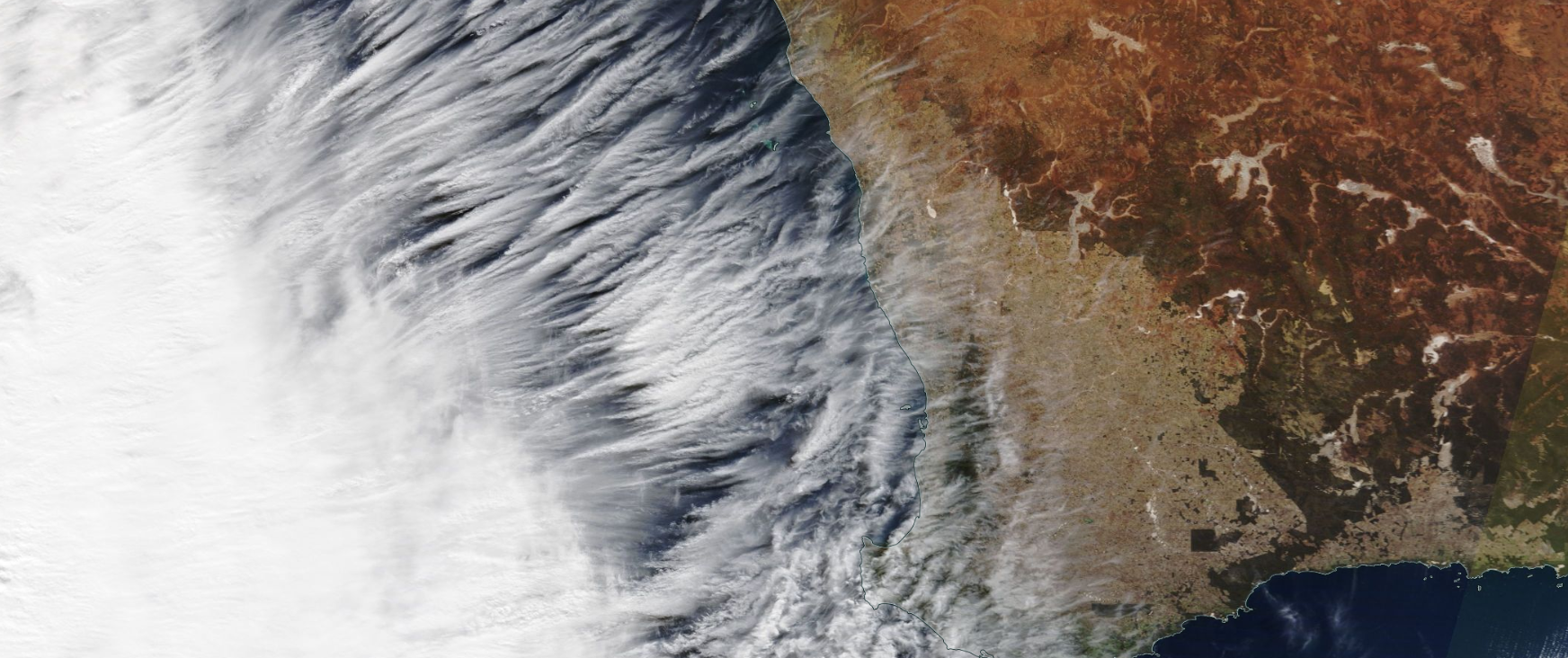

Banner image: In 2019, the VIIRS instrument captured this image of bands of cirrus clouds off the southwest coast of Australia. Such cloud bands portend intense weather. Photo: NASA.